Exposé | The 2º Death Dance – The 1º Cover-up

Part one of an investigative report.

By Cory Morningstar

December 10, 2010

Published in Huntington News, December 18th, 2010 http://bit.ly/hV0ZAr

“If you do not change direction, you may end up where you are heading.” – Lao Tzu

Behind every tragedy there is a story. This story is non-fiction and begins in the 1980s. It involves the Rockefeller-funded Villach conferences, the entrance of the neoclassical economists, propaganda, and most importantly the disappearance of the 1ºC temperature threshold cited as the safe limit in 1990 by the United Nations Advisory Group on Greenhouse Gases.

Like so many stories there was a villain. In this story, the villain’s name was ‘Insatiable Greed’ – like the Agent Smith virus, uploaded into ‘the Matrix’, Insatiable Greed was a terminating virus capable of multiplying.

Insatiable Greed was warned by the United Nations working group that beyond 1ºC there might be “rapid, unpredictable and non-linear responses that could lead to extensive ecosystem damage.” He was also warned that a 2ºC increase was “viewed as an upper limit beyond which the risks of grave damage to ecosystems, and of non-linear responses, are expected to increase rapidly.” Non-linear in this case means runaway climate change.

Earth and her inhabitants would clearly be threatened if the 1ºC threshold were to be exceeded. However, Insatiable Greed did not listen. Insatiable Greed dismissed the wise advice – only interested in knowing how much further destruction could be continued before the Earth would reach her maximum limit where catastrophe would be unavoidable. Being the virus he was, Insatiable Greed used the tools available to successfully infect and multiply – effectively burying the initial warning of 1ºC, thus ensuring the Earth’s inhabitants would believe the extremely dangerous threshold of 2ºC would be safe. This is the story.

The Origins of 2ºC – Neoclassical Economist Bill Nordhaus

The 2ºC temperature rise “target,” which is the only limit in the text of the ‘noted’ Copenhagen Accord, may well be one of the least understood cover-ups in history. The first suggestion to use 2° Celsius as a critical temperature limit for climate policy was not made by an esteemed climate scientist. Rather it was made by well-known neoclassical economist, W.D. Nordhaus, in a discussion paper of the prestigious Cowles Foundation. In 1977 Nordhaus stated: “If there were global temperatures more than 2 or 3° above the current average temperature, this would take the climate outside of the range of observations which have been made over the last several hundred thousand years.”[1]

This temperature increase is in fact well outside of the natural limits of the past 10,000 years during which agriculture and civilization developed. It is also higher than has existed over the past couple of million years.

“A rich man’s cat may drink the milk that a poor boy needs to remain healthy. Does this happen because the market is failing? Not at all, for the market mechanism is doing its job – putting goods in the hands of those that have the dollar votes.”

The author of the ice cold quote above is none other than our neoclassical economist and Yale University Professor Bill Nordhaus (Nordhaus and Samuelson, 2005), originator of the now infamous 2ºC threshold target that has come to dominate climate discussions and to dismiss all sensibilities as our Earth spins toward a terrifying, irreversible apocalypse.

Today, this 2ºC target, largely defined as the maximum allowable warming to avoid dangerous anthropogenic interference in the climate, has replaced an almost unknown 1ºC target highlighted in the 1990 UN AGGG (United Nations Advisory Group on Greenhouse Gases) report, published when climate change as a global issue was widely unknown.

Nordhaus has been one of the most influential economists involved in climate change models and construction of emissions scenarios for well over 30 years, having developed one of the earliest economic models to evaluate climate change policy. He has steadfastly opposed the drastic reductions in greenhouse gas emissions necessary for averting global catastrophe, “arguing instead for a slow process of emissions reduction, on the grounds that it would be more economically justifiable.” In a brilliant review by Richard York, Brett Clark and John Bellamy Foster, the frightening “logic” of Nordhaus is fully exposed.

The Origins of 1ºC – United Nations 1990

“…[B]eyond 1 degree C may elicit rapid, unpredictable and non-linear responses that could lead to extensive ecosystem damage.”

– United Nations Advisory Group on Greenhouse Gases

In 1986, three international bodies, the World Meteorological Organisation (WMO), the United Nations Environment Programme (UNEP) and the International Council of Scientific Unions (ICSU), which had co-sponsored the Villach Conference in 1985, formed the Advisory Group on Greenhouse Gases (AGGG), a small international committee with responsibility for assessing the available scientific information about the increase of greenhouse gases in the atmosphere and the likely impact.

In 1990 the AGGG calculated what level of climate change our planet could tolerate, also referred to as “environmental limits.” These levels and limits were summarized in the document, “Responding to Climate Change: Tools For Policy Development,” published by the Stockholm Environment Institute.

The targets and indicators set limits to rates and total amounts of temperature rise and sea level rise, on the basis of known behaviour of ecosystems. The AGGG report identified these limits in order to “protect both ecosystems as well as human systems.” The report states that the objective is: “stabilization of greenhouse gas concentrations in the atmosphere at a level that would prevent dangerous anthropogenic [human made] interference with the climate system.”

It adds: “Such a level should be achieved within a timeframe sufficient to allow ecosystems to adapt naturally to climate change, to ensure that food production is not threatened and to enable economic development to proceed in a sustainable manner.” Thus the report requires limits to both the total amount of change and the rate of change.

Further, they warned that a global temperature increase “beyond 1 degree C may elicit rapid, unpredictable and non-linear responses that could lead to extensive ecosystem damage.” A temperature increase of 2ºC was viewed as “an upper limit beyond which the risks of grave damage to ecosystems, and of non-linear responses, are expected to increase rapidly.” [For “non-linear,” read “runaway global climate change.”][2]

The Framework Convention on Climate Change signed at the Rio Earth Summit in 1992 should have ensured that staying within the AGGG-identified ecological limit of a 1ºC temperature rise was a central objective. But it didn’t. This investigation attempts to spell out why.

From the AGGG report. The low risk indicators:

Sea level rise

· maximum rate of rise of 20–50 mm per decade

· maximum total rise of 20–50 cm above 1990 global mean sea level

Global mean temperature

· maximum rate of increase of 0.1ºC per decade

· maximum total increase of 1.0ºC

The AGGG report also identified the CO2 [equivalent] concentrations corresponding to these as 330 – 400 ppm for 1ºC and 400 – 560ppm for 2ºC. It is critical to understand these concentrations cited from the report are far below the 350 ppm that has become the status quo target of today.

Citing a need for climate stabilization, a Dutch Ministry of Environment funded project concluded in 1988 that this would preferably be at just 1ºC above pre-industrial temperatures, but certainly with a maximum target of 400 ppm CO2 concentration (Krause, 1988).

Further Origins of 2ºC | Environmental Defense Fund & Rockefeller

Altering the framing of the climate change issue

Cost benefit analysis (CBA) is used like a bible to convey and perpetuate an image of science being driven by facts as opposed to politics. The high risk 2ºC target does not take note of the fact that we are beyond dangerous climate interference today, and that even 1ºC represents an immediate danger; rather, it represents the cost-benefit view. This view justifies the limit by comparing the benefits of avoiding climate damages with the costs of reducing economic growth. In this case, instead of the benefit being ‘the benefit of staying alive’, the benefit is expressed as percentage points of Gross Domestic Product (GDP).

Further origins of 2ºC trace back to a traffic light system in the early 1990s[3] as well as discussions within the Bellagio workshops held in Europe in 1987. Gordon Goodman, an AGGG member and Executive Director of the Beijer Institute (later, the Stockholm Environment Institute), received money from the Rockefeller Brothers Fund to commission two inter-linked workshops in September and November 1987 at Villach and Bellagio respectively. On 28 September 1987, the first of two workshops convened in Villach, Austria.[4]

The report states that the workshops were carried out under the auspices of the AGGG. It also states that AGGG members had limited input and control over these workshops. It is reported that papers contributed by twenty scientists, economists, and policy experts provided background material for working sessions where some fifty scientists and other technical experts from fourteen developed and developing countries examined the consequences of the emissions of greenhouse gases, with measures that might be used to limit or adapt to these effects.

It is stated that the objective of the Villach workshop was to provide a technical basis for deliberations by a group of high-level policy makers which convened subsequently at Bellagio, Italy, on November 9th, 1987. The findings and recommendations of the Bellagio workshop appear in a report titled “Developing Policies for Responding to Climatic Change” (Jaeger, 1988) published by the World Meteorological Organization and the United Nations Environment Programme in April 1988.

The project to organize the two workshops was initiated by the Beijer Institute (for whom Bill Nordhaus has written numerous papers), the Environmental Defense Fund (U.S.) and the Woods Hole Research Center (U.S.). A steering committee included Michael Oppenheimer from the Environmental Defense Fund, while sponsorship was provided by a small handful including the Rockefeller Brothers Fund (U.S.), the German Marshall Fund (U.S.), the Rockefeller Foundation (U.S.) and the Beijer Institute.

This workshop was supported by AGGG and behind them the Stockholm Environment Institute (SEI). The Bellagio conference proposed that policymakers should set a maximum rate of temperature increase of 0.1ºC per decade as the maximum tolerable limit, and a second target of 1ºC – 2ºC above preindustrial temperature. This appears to be one of the earliest references to a 2ºC maximum temperature change (along with rate of change) from a working group. The underlying principle for the rate of change target was that of the adaptability of ecosystems to climatic change, whereas in the case of the temperature target it was the boundary between changes that could be accommodated without serious or costly implications.

However, the goal of 0.1 °C per decade could only be achieved “with significant reductions in fossil fuel use” – World Climate Programme, 1988, p. 24, (emphasis in original)

Investigation into these workshops raises many questions. It has been suggested that the Villach conference was critical to altering the framing of the climate change issue. It was known at that time that to reach the 0.1°C per decade target, the rate of CO2 emissions might have to be reduced by up to 66%.

Rockefellers were now embedded with those who would be addressing policy change. Such policy, yet to be written, would be the principal means of effectively ending global society’s addiction to fossil fuels – which would effectively destroy the Rockefeller empire built on oil.

From 1968-1978, Frederick Seitz was president of Rockefeller University as well as chairman of the board of directors for the Science & Environmental Policy Project – a project set up to advocate global warming skepticism. Seitz was also the founding chairman of the George C. Marshall Institute, a tobacco industry consultant and a prominent skeptic on the issue of global warming.

In what must be the most bitter of ironies, the 2°C limit reemerged in 1990: in the same influential UN AGGG paper where 1ºC is cited, 2ºC was also cited as being a high risk target [Rijsberman, Swart, 1990]. We can only assume that pressure from outside and within the negotiations – from governments influenced and controlled by both corporations and economists – must have played a crucial role in killing off the 1ºC suggested temperature rise the world was warned not to surpass.

Psychotic Delusion

In an address titled “Economic Issues in Designing a Global Agreement on Global Warming” presented by Nordhaus to the Copenhagen Conference on Climate Science (10-12 March 2009), Nordhaus argues that it is not legitimate to try to second guess the market, going so far as to propose that people may “come to love the altered landscape of the warmer world.” It is most revealing that the world’s foremost scientists on climate change, who understand the terrifying risks we now face due to lack of action, invited one of the most influential voices calling for caution and moderation to give a keynote address.

Nordhaus uses his own magical economic model to spit out the very best apocalyptic scenario he would like our governments to adopt – one, in Nordhaus’s skewed view, that strengthens the economy. If the world does nothing, Nordhaus believes, we will reach a temperature increase of 3.1ºC by 2100 (a gross underestimate by most standards) and 5.3ºC by 2200. Instead, he suggests, the world should choose to spend what allows the temperature to rise by 2.6ºC by 2100, increasing to 3.5ºC by 2200 (keep in mind that any temperature increase will eventually double for future generations due to the inertia of the climate system). He presents this delusional scenario, which supports atmospheric concentrations rising to approximately 700 ppm, despite the fact that scientists claim such temperature increases will be catastrophic beyond measure. Hans Joachim Schellnhuber, director of the Potsdam Institute for Climate Impact Research, states “Our survival would very much depend on how well we were able to draw down carbon dioxide to 280 ppm”.

To Nordhaus – his numbers are nothing less than an epiphany – rather than doing nothing at all, we can save the world 3 trillion dollars. He insists that any attempt to stay below 2.6ºC – accepting that this is an eventual rise to 5.3ºC – would be a big mistake. Wassily Leontief and John Kenneth Galbraith summarized this delusional train of thought quite strikingly: “Departments of economics are graduating a generation of idiot savants, brilliant at esoteric mathematics yet innocent of actual economic life.” Of course, this description does not apply to all economists and there are the mavericks such as Kenneth Boulding, Howard Odum, Hazel Henderson, E. F. Schumacher and Herman Daly, to name a few.

In a 1997 paper, Linking Weak and Strong Sustainability Indicators: The Case of Global Warming, the authors define the neoclassical delusion as follows: “In several contributions, damage cost calculations of climate change like that of Nordhaus (1991), Cline (1991) and Fankhauser (1995) were criticized especially from an ecological perspective. It had been argued that mere neoclassical optimisation concepts tend to ignore the ecological, ethical and social dimension of the greenhouse effect, especially issues of an equitable distribution and a sustainable use of non-substitutable, essential functions of ecosystems.

The ecological argument addresses the use of damage cost values for computing optimal levels of emission abatement neglecting the special function of the atmosphere as a sink for greenhouse gases. This function is absolutely scarce and essential for the global ecosystem. It is feared that, by putting certain monetary values on this essential natural function, politicians may be encouraged to ‘sell it’ in exchange for goods being possibly of higher value in a short time horizon (e.g. income).”

Science is Politics | Politics is Science

In the 1980’s, although there was scientific uncertainty in regards to the precise time, location and nature of particular impacts, scientists stated it was inevitable that in the absence of major preventive and adaptive undertakings by humanity, significant and potentially disruptive changes in the earth’s environment would occur and that human-induced climate change would have profound consequences for the world’s social and natural systems.

Responding to these dire warnings, the redesigning and innovation towards decarbonization of energy systems comprised just one of several preventive measures that found itself moving up on the policy agenda towards the late 1980s. This was a direct result of the active policy engagement by a range of scientific institutions. The urgent scientific call for global action to prevent potential climate catastrophe opened the door to the global politics of the climate.

Intergovernmental negotiations soon transformed the vision of prevention into a more restricted mitigation/adaptation agenda. By 1992 it was clear that influential interest groups and powerful institutions had become heavily involved in the negotiations, including the Organisation for Economic Co-operation and Development (OECD), OPEC countries, oil-importing developing nations and private industry/corporations. The OECD has been heavily criticized by several civil society groups as well as developing states. The main criticism has been the narrowness of the OECD on account of its limited membership to a select few wealthy states.

In 1997–1998, the draft Multilateral Agreement on Investment (MAI) was heavily criticized by developing states. Many argued that the agreement would threaten protection of human rights, labor and environmental standards, and the most vulnerable countries. Critics argued (correctly) that the MAI would result in a ‘race to the bottom’ among countries willing to lower their labor and environmental standards to attract foreign investment. The OECD’s actions against harmful tax practices have also raised criticism. The primary objection is the sanctity of tax policy as a matter of sovereign entitlement. Saudi Arabia has played a key role in interfering with and shaping international climate policy since international negotiations began in the 1990s, in order to ensure that the billion dollar oil industry would not be affected.

As a consequence of such interference by many powerful players who sought to ensure the economic and political power structure would not be threatened, adaptation surfaced as the primary goal in international climate science and policy, effectively replacing the goals of prevention and mitigation from the 1980s.

By 1992, unwilling to sacrifice economic growth, negotiating parties rejected strict targets and timetables for emissions reductions. Rather, through the United Nations Framework Convention on Climate Change (UNFCCC), countries avoided the necessary targets and trajectories by instead agreeing to stabilize greenhouse gas concentrations in the atmosphere at a level that would prevent dangerous human interference with the climate system.

The result would be a vicious race to the bottom. Science became politics. Politics became science.

Which brings us to the current climate crisis, which we now face dead on.

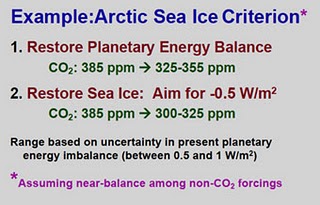

A recent and revealing example of questionable politics within science can be found in Hansen’s recent papers. Although the Arctic sea ice is diminishing faster than scientists ever thought possible, Hansen recently revised the number required to refreeze the Arctic sea ice upwards – from 300-325 ppm to 300-350 ppm. Considering we have feedbacks which are now operational, this revision can be hard to believe. This is a brilliant example of questionable politics within a realm of brilliant scientists. One could argue that as we are at 390 ppm CO2 today, the melting of the ice in the Arctic could be caused by being over 350 ppm. To date, this upward revision has gone relatively unchallenged. The first Hansen paper establishing 300-325 ppm for the Arctic sea ice was published prior to the creation of the brand 350.org. When McKibben and Hansen were criticized and asked the obvious question – why was 350.org focusing an entire campaign on the number/brand 350 when the safe boundary for the planet was under 325 ppm – the number was revised upwards. Further, the science of Veron et al. states that temperature-induced mass coral bleaching causing mortality on a wide geographic scale started when atmospheric CO2 levels exceeded 320 ppm. When CO2 levels reached 340 ppm, sporadic but highly destructive mass bleaching occurred in most reefs world-wide, often associated with El Niño events. So why aim for 350?

Is it possible that the science has been adjusted to accommodate the 350.org brand? This brand has been funded by Rockefeller Brothers and others who are set to lose if the world were to do what is necessary to get back down to 300 ppm – that being a complete abandonment of fossil fuels. The abandonment of fossil fuels is the greatest threat to the current power and monetary structure in the world.

Hansen says Arctic sea-ice passed its tipping point decades ago, and in his presentations has also specifically identified 300-325ppm as the target range for sea-ice restoration (see slide image), as did the paper: Open Atmos. Sci. J. 2:217-231. This view, by perhaps the most eminent climate scientist in America, is reinforced by Hans Joachim Schellnhuber, head of the Potsdam Institute who goes further stating “Our survival would very much depend on how well we were able to draw down carbon dioxide to 280 ppm”.

2008: Hansen – Where should Humanity Aim?

A further imbalance reduction, and thus CO2 ~300-325 ppm, may be needed to restore sea ice to its area of 25 years ago.

Assessment of Target CO2

Phenomenon: Target CO2 (ppm)

1. Arctic Sea Ice: 300-325

2. Ice Sheets/Sea Level: 300-350

3. Shifting Climatic Zones: 300-350

4. Alpine Water Supplies: 300-350

5. Avoid Ocean Acidification: 300-350

http://pubs.giss.nasa.gov/docs/2008/2008_Hansen_etal.pdf

2010: Hansen: French National Assembly May 2010

Assessment of Target CO2

Phenomenon: Target CO2 (ppm)

1. Arctic Sea Ice: 300-350

2. Ice Sheets/Sea Level: 300-350

3. Shifting Climatic Zones: 300-350

4. Alpine Water Supplies: 300-350

5. Avoid Ocean Acidification: 300-350

http://www.columbia.edu/~jeh1/2010/May2010_FrenchNationalAssembly.pdf

An excerpt from Climate Code Red: ‘350 is the wrong target: put the science first’:

… But that is only half the story. Here’s what else Hansen et al. said (emphasis added) in their article in Open Atmos. Sci. J. 2:217-231:

“Equilibrium sea level rise for today’s 385 ppm CO2 is at least several meters, judging from paleoclimate history. Accelerating mass losses from Greenland and West Antarctica heighten concerns about ice sheet stability. An initial CO2 target of 350 ppm, to be reassessed as effects on ice sheet mass balance are observed, is suggested”

It is important to note that this paragraph is not about the Arctic sea-ice tipping point, it’s about Antarctica. Hansen explains in the same article that 350ppm is a precautionary target to stop global loss of ice-sheets, because the paleoclimate record shows 450ppm ± 100ppm as boundary for glaciation/ deglaciation of Antarctica. In the next paragraph, attention turns to the question of Arctic sea ice:

“Stabilization of Arctic sea ice cover requires, to first approximation, restoration of planetary energy balance. Climate models driven by known forcings yield a present planetary energy imbalance of +0.5-1 W/m2. Observed heat increase in the upper 700 m of the ocean confirms the planetary energy imbalance, but observations of the entire ocean are needed for quantification. CO2 amount must be reduced to 325-355 ppm to increase outgoing flux 0.5-1 W/m2, if other forcings are unchanged. A further imbalance reduction, and thus CO2 ~300-325 ppm, may be needed to restore sea ice to its area of 25 years ago.”

The central point is that Arctic sea-ice is undergoing dramatic loss in summer, having lost 70-80% of its volume in the last 50 years, most since 2000. Without summer sea-ice, Greenland cannot escape a trajectory of ice-sheet loss leading to an eventual sea-level rise of 7 metres. Regional temperatures in the Arctic autumn are already up about 5ºC, and by mid-century an Arctic ice-free in summer, combined with more global warming, will be pushing Siberia close to the point where large-scale loss of carbon from melting permafrost would make further mitigation efforts futile. As Hansen told the US Congress in testimony last year, the “elements of a perfect storm, a global cataclysm, are assembled”.

In short, if you don’t have a target that aims to cool the planet sufficiently to get the sea-ice back, the climate system may spiral out of control, past many “tipping points” to the final “point of no return”.

And that target is not 350ppm, it’s around 300 ppm.

***

350.org

The most powerful, most aggressive agreement on climate in existence to this day, The People’s Agreement, was written at the World People’s Conference on Climate Change and the Rights of Mother Earth in April 2010 in Cochabamba, Bolivia. 350.org fought to highlight their brand/number in the agreement. They did not succeed.

McKibben now makes reference to 350 ppm as being “the upper limit”, and in a recent radio interview McKibben stated that pre-industrial levels might be the only safe zone. In spite of his acknowledgment of pre-industrial levels being a safe zone, despite mention of the 280 ppm in a recent ‘future’ scenario written by McKibben himself, and despite pressure from climate justice groups and activists – 350.org refuses to endorse the Cochabamba People’s Agreement. This agreement is the only democratic agreement ever drafted to date that has the potential to save civilization from catastrophic climate change.

McKibben states 350.org will not endorse the agreement as Hansen does not agree with the science of 300 ppm. This is ironic as on the 350.org website it now reads:

“These considerations have led 350.org to see the 350 ppm target not only in terms of CO2, but CO2e. On a technical level, this becomes a more ambitious target, incorporating other greenhouse gases. On a practical level, it signifies the same priorities 350 has embodied all along. Any climate target lower than where we are right now—be it 350 CO2e, 350 CO2, or anything else—represents a transformative shift in how the world operates.”

In effect, 350.org, now recognizing themselves as 350 ppm eq., goes beyond the targets of even that of Cochabamba. So what is the real reason they do not endorse it? Or maybe, 350 just means whatever you want it to mean – after all, it’s just a brand under the tcktcktck corporate umbrella – and without an expedient plan to zero – it is meaningless.

John Holdren – We are already beyond dangerous

Such inaction is impossible to justify today. Leading climate scientist John Holdren on 3 November 2006:

“Climate change is coming at us faster, with larger impacts and bigger risks, than even most climate scientists expected as recently as a few years ago. The stated goal of the UNFCCC – avoiding dangerous anthropogenic interference in the climate – is in fact unattainable, because today we are already experiencing dangerous anthropogenic interference. The real question now is whether we can still avoid catastrophic anthropogenic interference in climate. There is no guarantee that catastrophe can be avoided even if we start taking serious evasive action immediately. But it’s increasingly clear that the current level of anthropogenic interference is dangerous: Significant impacts in terms of floods, droughts, wildfires, species, melting ice already evident at ~0.8°C above pre-industrial Tavg. Current GHG concentrations commit us to 0.6°C more.” [5]

Furthermore, James Hansen has been pressing for a global climate state of emergency since 2008.

Paleoclimate scientist Andrew Glikson, interviewed by Australian Broadcasting Corporation (ABC) Radio Australia, 2009, in response to the question “what has to be done?” stated: “extremely rapid reduction in emissions … I would say, 80 percent within the next ten years or so … people like me have been looking at the evidence about this on a day to day basis and we have been doing it for years, and to look in to the abyss at this length is a daunting task.”

“My view is that the climate has already crossed at least one tipping point, about 1975-1976, and is now at a runaway state, implying that only emergency measures have a chance of making a difference…” “The costs of all of the above would require diversion of the trillions of dollars from global military expenditures to environmental mitigation.” – 2010, Andrew Glikson

Part 2:

References:

[1] Nordhaus 1977, p.39-40; see also Nordhaus 1975, pp. 22-23, where the same words are to be found, excluding a suggestive diagram.

[2] Hansen: The paleoclimate record does not provide a case with a climate forcing of the magnitude and speed that will occur if fossil fuels are all burned. Models are nowhere near the stage at which they can predict reliably when major ice sheet disintegration will begin. Nor can we say how close we are to methane hydrate instability. But these are questions of when, not if. If we burn all the fossil fuels, the ice sheets almost surely will melt entirely, with the final sea level rise about 75 meters (250 feet), with most of that possibly occurring within a time scale of centuries. Methane hydrates are likely to be more extensive and vulnerable now than they were in the early Cenozoic. It is difficult to imagine how the methane clathrates could survive, once the ocean has had time to warm. In that event a PETM-like warming could be added on top of the fossil fuel warming. After the ice is gone, would Earth proceed to the Venus syndrome, a runaway greenhouse effect that would destroy all life on the planet, perhaps permanently? While that is difficult to say based on present information, I’ve come to conclude that if we burn all reserves of oil, gas, and coal, there is a substantial chance we will initiate the runaway greenhouse. If we also burn the tar sands and tar shale, I believe the Venus syndrome is a dead certainty.

[3] Reports in the early 1990s established a target-based approach for climate policy – a ‘traffic light’ system for climate risk management consisting of three colors – green, amber, and red. Green was to symbolize limited damage and risk, implying a temperature rise of less than 0.1ºC per decade. Amber symbolized extensive damage and risk of instabilities, implying a temperature rise of between 0.1 and 0.2ºC per decade. Red symbolized considerable disruption to society and possibly rapid non-linear responses, and would occur with temperature rises of above 0.2ºC per decade. Note that the margin between green and amber was also estimated to be up to a maximum temperature increase of 1ºC, while the margin between amber and red was defined at 2ºC.

[4] There are conflicting reports as to under what auspices these workshops were held. Both the Beijer Institute of the Royal Swedish Academy of Sciences and the AGGG have been cited in conflicting reports.

[5] Holdren is advisor to President Barack Obama for Science and Technology, Director of the White House Office of Science and Technology Policy, and Co-Chair of the President’s Council of Advisors on Science and Technology.
